Using Codex as a task inbox

A proposed workflow to clear your backlog.

When OpenAI first introduced Codex, I must admit I wasn't very impressed. After all, I've been a heavy user of Claude Code, Anthropic's agentic coding tool, which seemed far superior. Moreover, with new tools popping up every other day, it's necessary to set boundaries and focus on just one.

However, I became slightly curious when OpenAI announced that Codex was available on mobile. This was genuinely different. Claude Code is a CLI that runs in the terminal, while Cursor is an IDE that requires a reasonably capable computer. What could possibly be done on a phone?

Then I stumbled upon an excellent review of Codex by Zachary Proser, highlighting its strengths and weaknesses. That convinced me: after all, what kind of hacker would I be if I didn't test every available tool? Besides, when you run a blog and a YouTube channel, there's no such thing as too much content...

But before diving into the strengths and weaknesses of this new tool, let's clarify: what exactly is Codex?

Let's start with OpenAI's own definition: Codex is a cloud-based software engineering agent. In other words, it's an agent capable of replicating the work of a software engineer (SWE).

This is where skepticism peaks. Recently, there have been numerous promises of AI replacing programmers, yet that scenario still feels distant. Granted, models have improved significantly, and the pace of development combined with market competition leads optimists to see this replacement as just a matter of time. But what does real-world practice tell us?

As mentioned, I've been a heavy user of AI tools. I'm also formally trained as a software engineer and work actively in the field, so I deal with tasks well beyond the side projects where AI usually excels. In that setting, AI still doesn't replace the work of a programmer on a large software team.

The truth is, humans' major advantage over machines is our capacity for abstract thinking, in the metaphysical sense, and that will always give us an edge over AI. As AI develops, however, many who perform mediocrely (in the literal sense of the word) may get left behind.

Therefore, I approach the promise of a software engineering agent with substantial skepticism. Sure, it can code. But can it abstract problems? Identify stakeholder preferences? Adhere to codebase standards? Suggest alternative approaches? Anticipate issues? The list goes on.

With all this in mind, I decided to test Codex, currently available only on ChatGPT's Pro plan (at a modest $200/month), and ended up pleasantly surprised. I documented the entire testing process on YouTube.

Why use Codex?

Straight to the point: what is Codex’s main advantage? In the article mentioned above, the author describes his ideal workday:

"I'd like to start my morning in an office, launch a bunch of tasks, get some planning out of the way, and then step out for a long walk in nature."

As a big advocate of reducing cognitive load at work, I completely understand his sentiment. Many everyday programming tasks are dull or mentally taxing: reading documentation, finding the correct file to fix a bug, adhering to unfamiliar standards, etc.

Additionally, anyone working on multiple projects (as I do with QuantBrasil, MMA Trends, Portal Vasco, and other toy projects) would appreciate being able to switch contexts without mental exhaustion. "Context switching is the productivity killer," Elon Musk said.

Here's Codex's main advantage: as a frictionless cloud-based agent, it lets you delegate tasks to AI in parallel across multiple projects with minimal mental effort. With a generous limit of 60 tasks per hour (essentially unlimited), the tool acts as a brain dump or task inbox, turning ideas into actionable tasks the moment you capture them.

The end of backlogs

Let me explain. When I have an idea for a feature, improvement, or bug fix, I typically need to record it somehow — usually using project management tools like Linear or Jira, or simply jotting it down in Obsidian or Notion. Later, this idea needs to become a task. But what if the act of recording itself became the task? That’s the world Codex promises.

You can dispatch multiple tasks and review them later, quickly approving the simpler ones while giving more attention to the intricate ones. Obviously, this workflow depends on an AI that is competent at programming, and Codex's codex-1 model delivers.

However, the underlying model is tuned to solve problems in one shot, so iterating on a task isn't a pleasant experience. If you want to test a follow-up iteration, it becomes a new pull request, even within the same task. This makes the experience somewhat cumbersome, though I expect improvements soon.

Where Codex excels

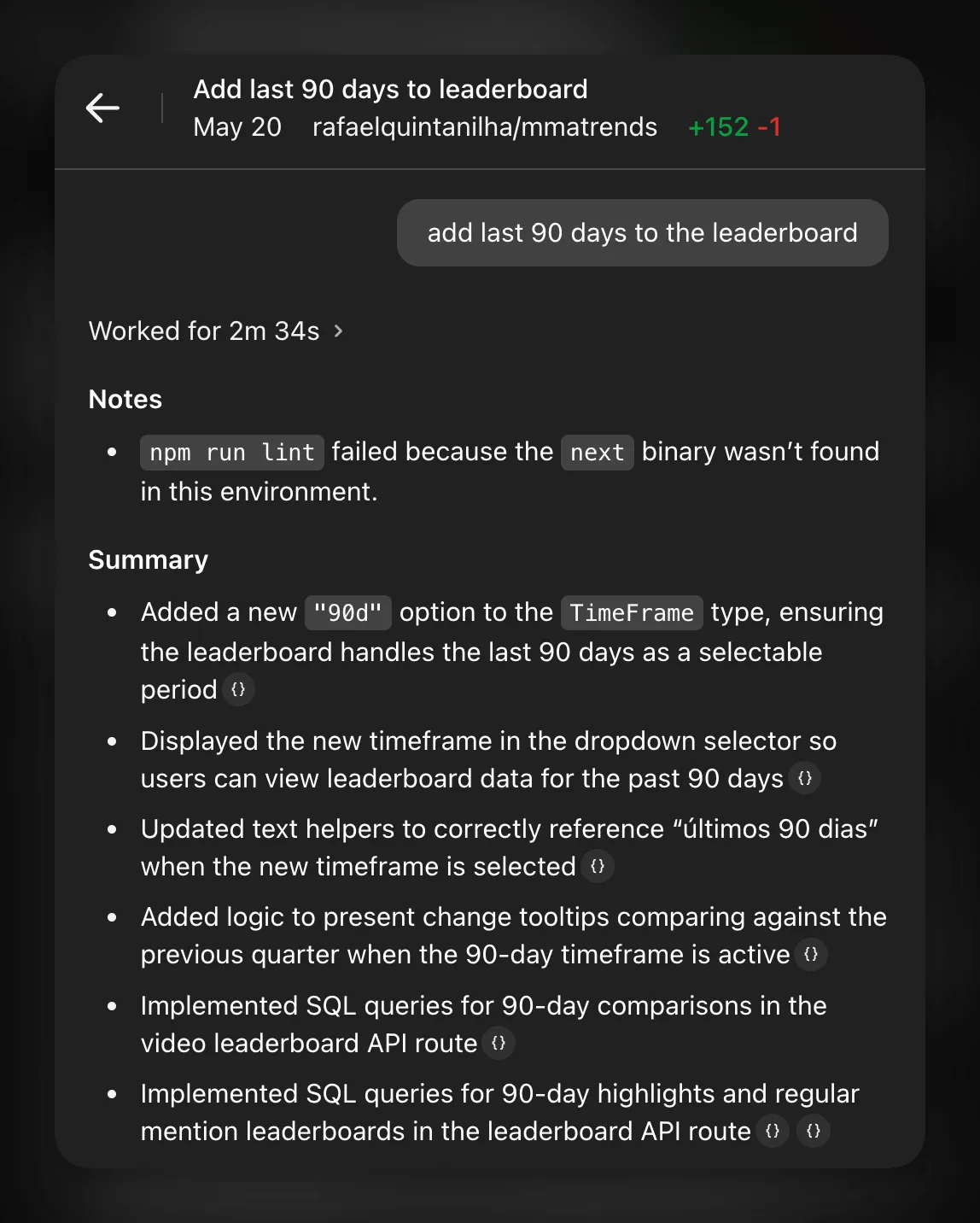

So, the big question is: where is Codex genuinely useful? In its current form, the best use case is self-contained tasks with clear existing references in the codebase. For instance, adding a "last 90 days" filter to the MMA Trends leaderboard was as simple as writing "add last 90 days to the leaderboard," and Codex identified and implemented the necessary changes.

Thus, minor additions, especially backend tasks, and routine activities like refactoring components, adding unit tests, or fixing linter errors are excellent fits for this new agent.
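To make the "last 90 days" example more concrete, here is a hypothetical sketch of the kind of small, additive change such a prompt asks for. Everything in it is invented for illustration: the `Fight` record, the points-based ranking and the `leaderboard` function are assumptions, not how MMA Trends actually stores or ranks its data. It is also not the code Codex produced.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional, Tuple


# Hypothetical data model: NOT the real MMA Trends schema.
@dataclass
class Fight:
    fighter: str
    fight_date: date
    points: int


def leaderboard(
    fights: List[Fight],
    days: Optional[int] = None,
    today: Optional[date] = None,
) -> List[Tuple[str, int]]:
    """Sum points per fighter, optionally only over the last `days` days.

    `days=None` keeps the original all-time behavior, so existing
    callers are unaffected by the new filter.
    """
    today = today or date.today()
    cutoff = today - timedelta(days=days) if days is not None else None

    totals: dict = {}
    for fight in fights:
        # Skip fights older than the cutoff when a window is requested.
        if cutoff is not None and fight.fight_date < cutoff:
            continue
        totals[fight.fighter] = totals.get(fight.fighter, 0) + fight.points

    # Highest score first; ties broken alphabetically for a stable order.
    return sorted(totals.items(), key=lambda kv: (-kv[1], kv[0]))
```

For example, with `today=date(2024, 6, 1)` and `days=90`, the cutoff is 2024-03-03, so only fights on or after that date count. Defaulting `days` to `None` makes the feature purely additive. Changes like this, which touch one function and follow patterns already in the codebase, are the sort of task the paragraph above describes as a good fit.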

Areas for improvement

Naturally, many tasks are not suitable for Codex. In particular, elaborate frontend tasks don't fit well: the agent doesn't run the app for you, so you can't see how the result looks once the task is done. Consequently, the loop of creating a PR, checking it out locally, requesting changes, and iterating back and forth is cumbersome (forget about doing this in the woods).

Also, for security reasons, Codex doesn't have internet access after the environment setup, so up-to-date documentation and external tools are out of reach. Thus, either the prompts must be very precise (which undercuts the promised convenience) or the tasks must be extremely simple (like adding a button, moving text, or adding a filter).

A workflow to consider

Finally, I'd like to propose a workflow designed to minimize cognitive load while remaining practical for larger projects and complex features. Using Codex as a brain dump provides a starting point, albeit an imperfect one. Most tasks will still need polishing. But at that stage there's already existing code, context, and intention, which makes it easier to hand the work to a more suitable tool like Claude Code for further refinement.

I envision a scenario where I open Codex to find 5-10 tasks ready for review instead of facing a list of Jira tickets to start from scratch. The cognitive savings here are tremendous, and they could transform a programmer's productivity.

Ultimately, today isn't the day AI replaces us. Is that day getting closer? Currently, Codex could competently replace an intern, handling small asynchronous tasks without much trouble. Tomorrow, we might be able to delegate far more complex problems to it.

Nevertheless, the ability to synthesize and abstract, which is exactly what it takes to work effectively with AI, is increasingly valuable. Knowing how to express yourself, identify bottlenecks, and judge when to use such tools remains crucial. Focus on these skills, and there's no need to fear Codex. On the contrary, when the day comes that we no longer have to code, we can breathe a sigh of relief, finally liberated.